Meldy: a music generator

Matteo Bernardini, Yilin Zhu

{10743181,10702368}@mail.polimi.it

ACTAM & CMRM Project 2019-2020

Overview of the Project

Project Structure

Topics

- Computer Music

- Theoretical C.S.

- Computational Creativity

Resources

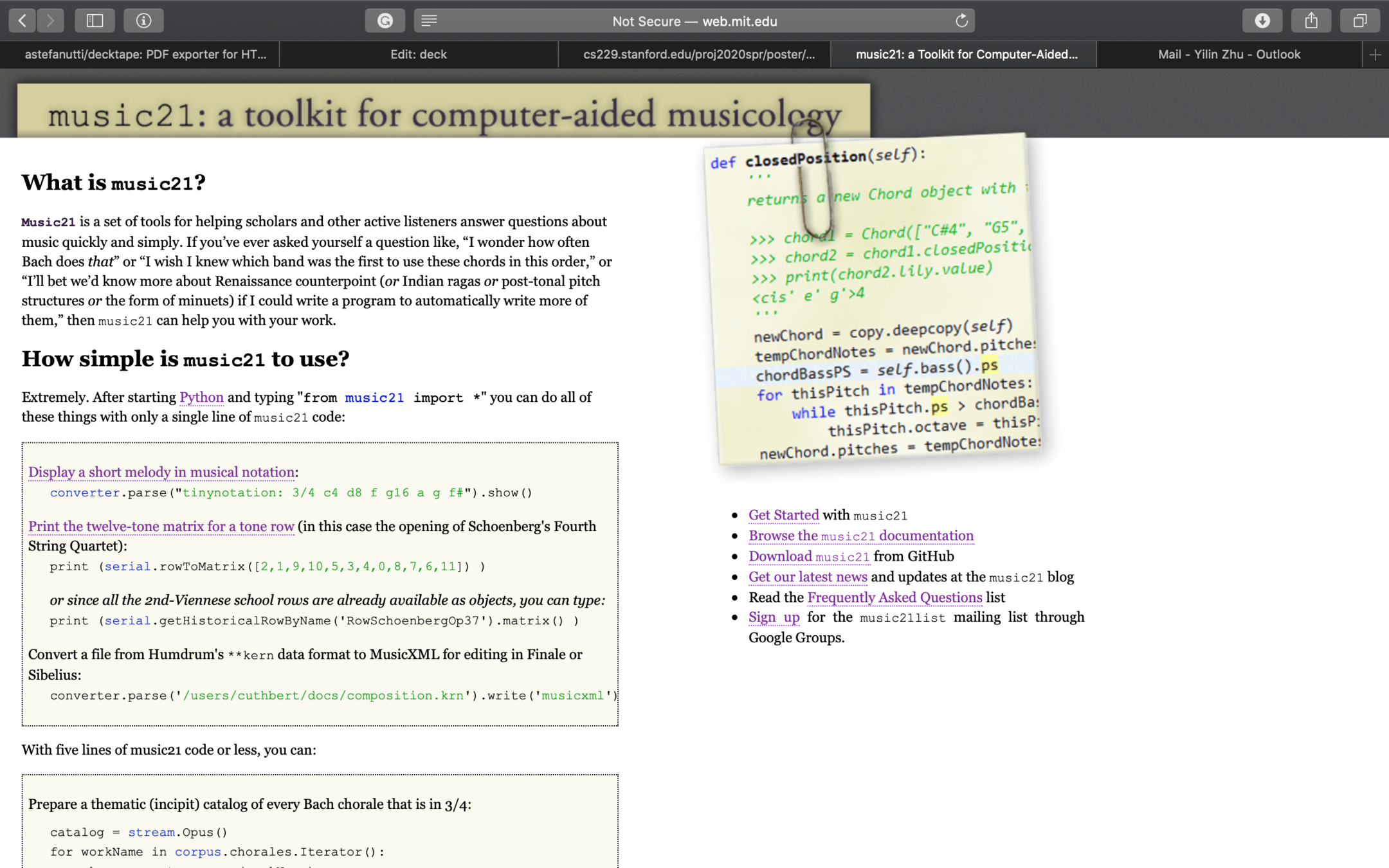

- music21: computer-aided musicology toolkit

- p5.js: web visualization

- OSMD: MusicXML rendering

- webpack: development

1) User Input

Choose a mood as input

- 2D picker: strength of a mood

- implemented using p5.js

- Dimensional approach from Music Emotion Recognition (MER)

- x-axis: valence, in range [0, 1]

- y-axis: arousal, in range [0, 1]
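As a rough illustration of the picker's output, the sketch below converts a click position into a (valence, arousal) pair in [0, 1]. The function name `picker_to_mood`, the pixel-based signature, and the choice to flip the y-axis so that "up" means higher arousal are assumptions for illustration; the actual picker is written with p5.js.

```python
def picker_to_mood(x_px, y_px, width, height):
    """Convert a click position in pixels to (valence, arousal) in [0, 1]."""
    # Clamp so that clicks on or outside the border stay inside the range.
    valence = min(max(x_px / width, 0.0), 1.0)
    # Screen y grows downwards; flipping it makes "up" mean higher arousal
    # (an assumption, not taken from the slides).
    arousal = min(max(1.0 - y_px / height, 0.0), 1.0)
    return valence, arousal


valence, arousal = picker_to_mood(300, 100, 400, 400)
```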

2) Melody Generation

2.1 Mapping from mood to music features

- valence & arousal

- higher valence -> brighter mode

- higher arousal -> higher pitch

- higher arousal -> faster tempo
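A minimal sketch of such a mapping, under stated assumptions: the modes are ordered from darkest to brightest in the usual way, and the tempo range (60 to 160 BPM) and octave range (3 to 5) are hypothetical values chosen only for the example. The name `mood_to_features` is likewise illustrative.

```python
# Diatonic modes ordered from darkest to brightest.
MODES_DARK_TO_BRIGHT = [
    "locrian", "phrygian", "aeolian", "dorian",
    "mixolydian", "ionian", "lydian",
]


def mood_to_features(valence, arousal, min_bpm=60, max_bpm=160,
                     low_octave=3, high_octave=5):
    """Map valence and arousal in [0, 1] to mode, tempo and octave."""
    # Higher valence -> brighter mode.
    index = min(int(valence * len(MODES_DARK_TO_BRIGHT)),
                len(MODES_DARK_TO_BRIGHT) - 1)
    # Higher arousal -> faster tempo (hypothetical BPM range).
    tempo_bpm = round(min_bpm + arousal * (max_bpm - min_bpm))
    # Higher arousal -> higher pitch (hypothetical octave range).
    octave = low_octave + round(arousal * (high_octave - low_octave))
    return {"mode": MODES_DARK_TO_BRIGHT[index],
            "tempo_bpm": tempo_bpm,
            "octave": octave}


features = mood_to_features(0.5, 0.5)
```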


2.2 Time signature

- different moods -> different rhythmic patterns

- 4/4 : stability

- 3/4 : danceability

- odd time signatures (e.g. 7/8): tension and instability

We decided to ignore this aspect and simply stick to 4/4, in order to focus on the other main features and to simplify the generation of durations.


2.3 Key signature

- the mode is already decided; we still need a root note

- our approach: pick a random root among the 12 pitches of the chromatic scale

- first problem: not all combinations of root name and mode are theoretically valid

- usual names -> C C# D Eb E F F# G Ab A Bb B

- second problem: these are the usual names only for the ionian mode

- pick a random "base root" in ionian -> rotate to the mode (e.g. base: C#, mode: lydian -> key: F# lydian)
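The rotation step can be sketched in plain Python as follows. This is an illustration of the idea rather than the project's code: the helper name `rotate_to_mode` and the spelling logic are assumptions, and the sketch does not use music21. It takes the ionian base root, finds the scale degree on which the requested mode starts, and spells that degree with the correct letter name.

```python
import random

LETTERS = "CDEFGAB"
NATURAL_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones of the ionian scale
# 0-based degree of the ionian scale on which each mode starts.
MODE_DEGREE = {"ionian": 0, "dorian": 1, "phrygian": 2, "lydian": 3,
               "mixolydian": 4, "aeolian": 5, "locrian": 6}
BASE_ROOTS = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]


def rotate_to_mode(base_root, mode):
    """Return the root of `mode` sharing the notes of `base_root` ionian."""
    letter, accidental = base_root[0], base_root[1:]
    root_pc = NATURAL_PC[letter] + accidental.count("#") - accidental.count("b")
    degree = MODE_DEGREE[mode]
    # The new root uses the letter `degree` steps above the base letter.
    target_letter = LETTERS[(LETTERS.index(letter) + degree) % 7]
    target_pc = (root_pc + MAJOR_STEPS[degree]) % 12
    # Signed distance from the natural letter: >0 sharps, <0 flats.
    shift = (target_pc - NATURAL_PC[target_letter] + 6) % 12 - 6
    return target_letter + ("#" * shift if shift > 0 else "b" * -shift)


base = random.choice(BASE_ROOTS)
key_name = f"{rotate_to_mode(base, 'lydian')} lydian"
```

With base C# and mode lydian, `rotate_to_mode` returns F#, matching the example above.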


2.4 Melodic Sequence

- Base model: L-system (Formal Grammar)

$$ G = \{V, \omega, P\} $$

- \(P\) and \(\omega\) are stochastic

- Extended model: different rulesets and a selection parameter

$$ G = \{V,\omega,R,p\} \qquad R = \{ (P_1,s_1), (P_2,s_2),\cdots\} \qquad p \in \mathbb{R} $$

- Two separate grammars for generating:

- Sequence of pitches

- Sequence of durations
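To make the base model concrete, here is a minimal sketch of a stochastic L-system, reading \(V\) as the alphabet, \(\omega\) as the axiom and \(P\) as the productions (the standard L-system roles). The symbols, weights and the `expand` helper are hypothetical and are not the project's actual grammar.

```python
import random


def expand(axiom, rules, iterations, rng):
    """Rewrite all symbols in parallel, picking among weighted productions."""
    sequence = list(axiom)
    for _ in range(iterations):
        rewritten = []
        for symbol in sequence:
            options = rules.get(symbol)
            if options is None:
                rewritten.append(symbol)  # symbols without a rule are kept
                continue
            successors = [successor for successor, _ in options]
            weights = [weight for _, weight in options]
            rewritten.extend(rng.choices(successors, weights=weights)[0])
        sequence = rewritten
    return sequence


# Hypothetical stochastic productions: symbol -> [(successor, probability)].
RULES = {
    "a": [(["a", "b"], 0.7), (["b"], 0.3)],
    "b": [(["a"], 1.0)],
}
rng = random.Random(0)
axiom = rng.choice([["a"], ["b"]])  # a stochastic axiom, picked at random
melody_symbols = expand(axiom, RULES, 4, rng)
```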


- \(p\) is used to select which \(P_i\) to use

- \(P_i\) is used for the grammar if \(|p-s_i|\) is the minimum difference among the defined rulesets

- This model introduces further variability over a basic L-system
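The selection rule can be sketched in a few lines: given the pairs \((P_i, s_i)\) and the parameter \(p\), pick the \(P_i\) whose \(s_i\) is closest to \(p\). The function name `select_ruleset` and the placeholder ruleset values are assumptions; tie-breaking (first match wins) is also a choice of this sketch, not something stated in the slides.

```python
def select_ruleset(rulesets, p):
    """Return the P_i whose s_i minimises |p - s_i|.

    `rulesets` is a list of (productions, s) pairs; on a tie the
    first pair in the list wins.
    """
    productions, _ = min(rulesets, key=lambda pair: abs(p - pair[1]))
    return productions


# Placeholder production sets, labelled by name only for the example.
RULESETS = [("low", 0.0), ("middle", 0.5), ("high", 1.0)]
chosen = select_ruleset(RULESETS, 0.8)
```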


2.5 Pitches

- mood from input -> key signature

- use relative degrees instead of actual pitches

- we can do the transposition later

- valence influences the melody (i.e. p=valence)

- positive -> uplifting

- negative -> downwards

- vary between two rulesets:

- higher valence (s=1): higher probability of uplifting motion, consonant melodic intervals

- lower valence (s=0): downwards motion, dissonant melodic intervals
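Working with relative degrees means the grammar output stays independent of the key until the very end. The sketch below shows one way the later transposition could look, assuming 0-based scale degrees (0 is the root, 7 is the root one octave up) and MIDI note numbers for the output; both conventions and the name `degrees_to_midi` are assumptions for illustration.

```python
# Semitone offsets of each mode's seven degrees above its root.
MODE_INTERVALS = {
    "ionian":     [0, 2, 4, 5, 7, 9, 11],
    "dorian":     [0, 2, 3, 5, 7, 9, 10],
    "phrygian":   [0, 1, 3, 5, 7, 8, 10],
    "lydian":     [0, 2, 4, 6, 7, 9, 11],
    "mixolydian": [0, 2, 4, 5, 7, 9, 10],
    "aeolian":    [0, 2, 3, 5, 7, 8, 10],
    "locrian":    [0, 1, 3, 5, 6, 8, 10],
}


def degrees_to_midi(degrees, root_midi, mode):
    """Transpose 0-based scale degrees to MIDI note numbers."""
    intervals = MODE_INTERVALS[mode]
    pitches = []
    for degree in degrees:
        # divmod also handles negative degrees (notes below the root).
        octave, step = divmod(degree, 7)
        pitches.append(root_midi + 12 * octave + intervals[step])
    return pitches


# F#4 is MIDI note 66; degrees 0, 3 and 7 of F# lydian.
pitches = degrees_to_midi([0, 3, 7], 66, "lydian")
```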


2.6 Durations

- simplify the task:

- stick to 4/4

- define durations with "1" = "quarter note"

- vary between 3 rulesets (p=arousal):

- higher arousal (s=1): add faster notes (8ths and 16ths) and syncopations

- middle arousal (s=0.5): remove faster notes and syncopations

- low arousal (s=0): simple and straight rhythms
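With durations expressed in quarter-note units, a 4/4 bar always sums to 4, which keeps the bookkeeping simple. As a hedged sketch, the hypothetical helper below trims or pads a generated duration sequence so that it fills a whole number of bars; it only adjusts the end of the sequence and does not split notes that cross inner bar lines.

```python
def fit_to_bars(durations, n_bars, beats_per_bar=4.0):
    """Clip or pad durations (1 = quarter note) to fill exactly n_bars."""
    total = n_bars * beats_per_bar
    fitted, elapsed = [], 0.0
    for duration in durations:
        if elapsed >= total:
            break
        # Shorten the last note if it would run past the final bar line.
        duration = min(duration, total - elapsed)
        fitted.append(duration)
        elapsed += duration
    if elapsed < total:
        # Pad with one final long value (it could also be a rest).
        fitted.append(total - elapsed)
    return fitted


# 8ths are 0.5 and 16ths are 0.25 in quarter-note units.
bar = fit_to_bars([1, 0.5, 0.5, 1, 2], n_bars=1)
```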


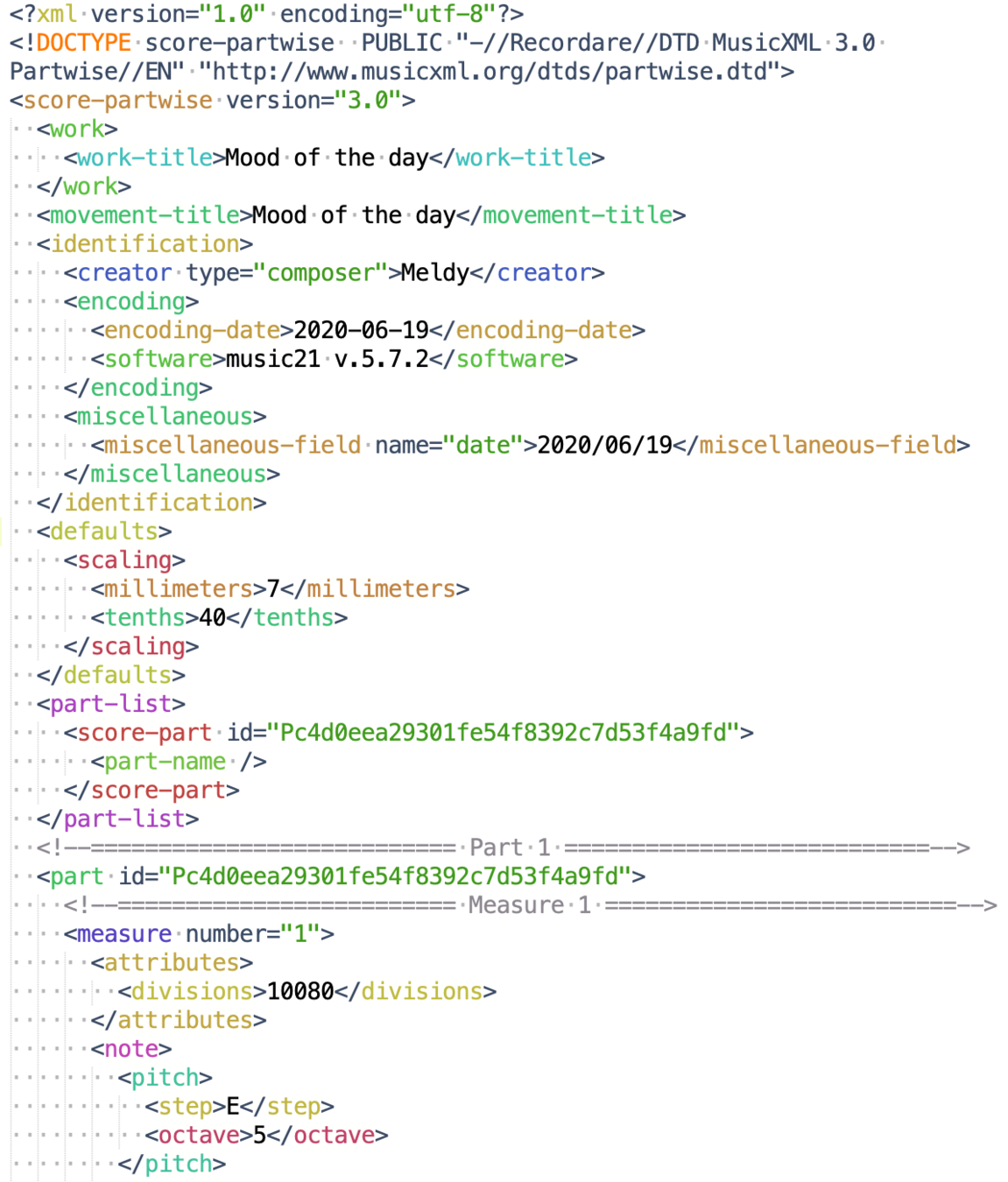

2.7 Output format

- MusicXML: designed for music notation, so better suited than MIDI for representing a score

- Natively supported by music21

3) Resources

3.1 Musicology Tools: music21

- First attempt: music21j

- not mature enough, several bugs

- Conclusion: music21 (Python version)

- back-end needed for this step


3.2 Development Pipeline

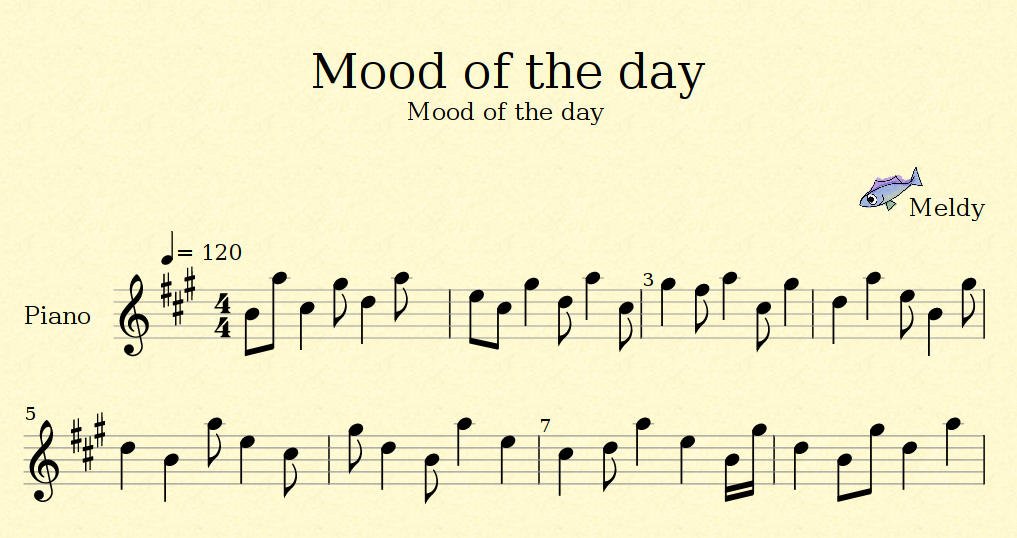

4) Music Rendering

4.1 Show Sheet Music

- User clicks the Impress Me button → view switch

- Rendering MusicXML to music notation

- OpenSheetMusicDisplay: TypeScript library

- output as SVG, rendered natively by the browser

4.2 Play and listen to it

- Play → listen to the generated music

- Download → save MusicXML (open with Finale, Sibelius, ...)

- Restart → go back to the mood selection view

Future Works

How can we improve this project

- Enhance grammar model accuracy

- try to apply some evaluation or reward function

- From a single melodic paragraph to multiple paragraphs

- Introduce different musical forms (e.g. sonata)

- Other music elements (e.g. different time signatures)

Reference & Links

- McCormack J. Grammar based music composition. Complex Systems, 1996, 96: 321-336.

- music21 Reference Documentation

- webpack Documentation

Thanks for your attention!